Hi,

I’m fairly new to wandb (and to AI in general). I’m currently working with a simple script that runs a ResNet18 for image classification. I’ve been adapting a script that originally logged many metrics (train_epoch_loss, valid_accuracy, etc.), and with it I was able to customize which columns are shown in the project’s runs panel.

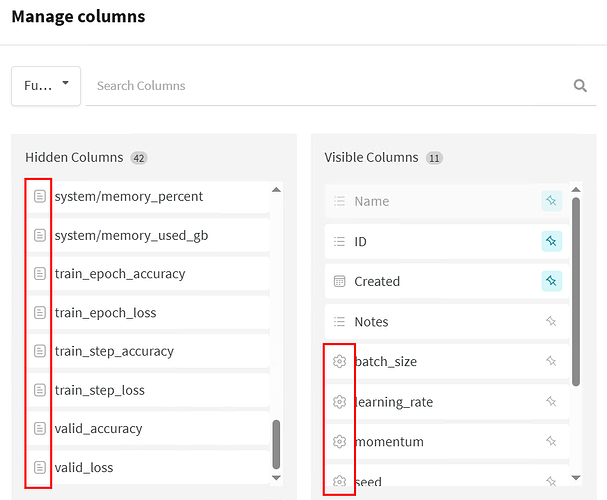

However, after trying really hard to find a way to track GPU usage, I ended up changing the script quite a bit. Everything works fine, but now I am unable to customize most of the columns: they stay in the hidden columns no matter what. I have noticed that the icon next to the column name is different for the columns I cannot add to the visible ones (see the screenshot). What am I doing wrong?

I am also including an example of how I pass the information in my script:

    # WandB configuration
    config_dict = {
        "batch_size": self.config.batch_size,
        "learning_rate": self.config.learning_rate,
        "momentum": self.config.momentum,
        "seed": self.config.seed,
        "epochs": self.config.epochs
    }

    # Add GPU information if available
    if torch.cuda.is_available():
        config_dict.update({
            "gpu_name": torch.cuda.get_device_name(0),
            "gpu_memory_gb": torch.cuda.get_device_properties(0).total_memory / 1024**3,
            "cuda_version": torch.version.cuda,
            "pytorch_version": torch.__version__
        })

    wandb.config.update(config_dict)

...

    # Per-epoch WandB log with detailed GPU metrics
    epoch_metrics = {
        "train_epoch_accuracy": 100. * train_acc,
        "train_epoch_loss": train_loss,
        "valid_accuracy": 100. * valid_acc,
        "valid_loss": valid_loss,
        "epoch": epoch
    }

    # Add end-of-epoch GPU metrics
    if torch.cuda.is_available():
        epoch_metrics.update({
            "gpu/memory_allocated_epoch_end": torch.cuda.memory_allocated() / 1024**3,
            "gpu/memory_reserved_epoch_end": torch.cuda.memory_reserved() / 1024**3,
            "gpu/memory_max_allocated_epoch": torch.cuda.max_memory_allocated() / 1024**3
        })

    if wandb.run is not None:
        wandb.log(epoch_metrics)
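
For anyone who wants to reproduce the shape of the logged data without a GPU or a wandb account, here is a minimal standalone sketch of the per-epoch dictionary above. The helper name `build_epoch_metrics` and its `gpu_bytes` argument are hypothetical (they are not in my script); the memory figures are passed in as plain byte counts instead of being read from `torch.cuda`, and the returned dictionary is what would be handed to `wandb.log()`.

```python
from typing import Optional

GIB = 1024 ** 3  # bytes per GiB, the same divisor used in the snippet above


def build_epoch_metrics(
    epoch: int,
    train_acc: float,
    train_loss: float,
    valid_acc: float,
    valid_loss: float,
    gpu_bytes: Optional[dict] = None,
) -> dict:
    """Hypothetical helper: assemble the flat dict that would be passed to wandb.log()."""
    metrics = {
        "train_epoch_accuracy": 100.0 * train_acc,
        "train_epoch_loss": train_loss,
        "valid_accuracy": 100.0 * valid_acc,
        "valid_loss": valid_loss,
        "epoch": epoch,
    }
    # GPU entries are only added when byte counts are supplied,
    # mirroring the torch.cuda.is_available() guard in the original code.
    if gpu_bytes is not None:
        metrics.update({
            "gpu/memory_allocated_epoch_end": gpu_bytes["allocated"] / GIB,
            "gpu/memory_reserved_epoch_end": gpu_bytes["reserved"] / GIB,
            "gpu/memory_max_allocated_epoch": gpu_bytes["max_allocated"] / GIB,
        })
    return metrics


# Made-up values, purely for illustration.
example = build_epoch_metrics(
    epoch=3, train_acc=0.5, train_loss=0.7, valid_acc=0.25, valid_loss=0.9,
    gpu_bytes={"allocated": 2 * GIB, "reserved": 3 * GIB, "max_allocated": 4 * GIB},
)
cpu_only = build_epoch_metrics(
    epoch=3, train_acc=0.5, train_loss=0.7, valid_acc=0.25, valid_loss=0.9,
)
print(sorted(example))
```

The CPU-only call yields just the five training and validation keys, while the GPU call adds the three `gpu/...` keys, so the set of logged keys differs between machines with and without CUDA.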