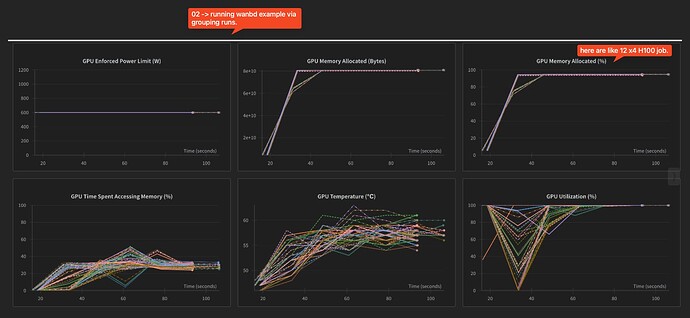

Is there any way to configure Weights & Biases for multi-node training with axolotl? What are the best practices for logging distributed training?

Situation: axolotl only sees the main communication node. The wandb section of my axolotl config:

```yaml
wandb_project: gemma-3-cpt-pretraining
wandb_entity:
wandb_watch:
wandb_name: gemma-3-4b-cpt-100k
wandb_log_model:
```

Resource: Log distributed training experiments (Weights & Biases Documentation)
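The W&B guide on distributed training describes, among other options, logging only from the rank-0 process or starting one run per process and grouping those runs. Below is a minimal sketch of the per-process, grouped pattern. It assumes the launcher (for example `torchrun`) exports `RANK`, `LOCAL_RANK` and `WORLD_SIZE` to every process. The helper `wandb_init_kwargs` is hypothetical and is not part of axolotl. This sketch does not establish whether axolotl's built-in W&B integration can be configured this way.

```python
import os
from typing import Mapping, Optional


def wandb_init_kwargs(
    project: str,
    group: str,
    env: Optional[Mapping[str, str]] = None,
) -> dict:
    """Build keyword arguments for wandb.init() for one training process.

    Hypothetical helper. It assumes the launcher (e.g. torchrun) exported
    RANK, LOCAL_RANK and WORLD_SIZE. Each process gets its own run, and all
    runs share `group` so they can be viewed together in the W&B UI.
    """
    env = os.environ if env is None else env
    rank = int(env.get("RANK", "0"))
    local_rank = int(env.get("LOCAL_RANK", "0"))
    world_size = int(env.get("WORLD_SIZE", "1"))
    return {
        "project": project,
        "group": group,  # identical on every node and process
        "name": f"{group}-rank{rank}",  # unique per process
        "job_type": "main" if rank == 0 else "worker",
        "config": {
            "rank": rank,
            "local_rank": local_rank,
            "world_size": world_size,
        },
    }


if __name__ == "__main__":
    # Simulated environment: global rank 5 of 8 (second node, 4 GPUs per node).
    fake_env = {"RANK": "5", "LOCAL_RANK": "1", "WORLD_SIZE": "8"}
    kwargs = wandb_init_kwargs(
        "gemma-3-cpt-pretraining", "gemma-3-4b-cpt-100k", fake_env
    )
    print(kwargs["name"], kwargs["job_type"])
    # In a real job, each process would then call: wandb.init(**kwargs)
```

Because every process reports to its own run, system metrics such as GPU utilization from the non-main nodes are also recorded. The trade-off is that each run carries one more entry in the W&B project.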